SLP Chat

This will be a place where therapists can share ideas, problem solve and express concerns. Let’s work together to make our jobs easier!

Teresa

What is your S.M.A.R.T. goal?

Looking in my archives, back in September 2012 I wrote an article on SMART goals and asked people to share their own SMART goals. At that time I had searched and searched and found no specific examples for speech language pathologists. I would love to know what other SLPs ended up writing for their SMART goals and, if I get enough responses, keep a database to help others. If you want to share your SMART goal(s) to help others, please pass them along. I would also be interested in knowing how your goal was developed, who you worked with, if extra time was given to implement your goal, your caseload and if your goal was achieved. No reason to reinvent the wheel.

Teresa

Just a thought: I was speaking to a non-teacher friend about SMART goals and her comment was “As opposed to DUMB goals”. Do you think the powers that be could have picked a better acronym or do you think they did it on purpose?

Let’s be realistic about school wellness programs…then maybe they’ll work

Last week a friend sent me another article on Michelle Obama’s Let’s Move campaign. The article focused on new policies under which “unhealthy foods” would not be allowed to be advertised during the school day. In particular, the article referred to the advertising of certain Coca-Cola company products not being allowed in schools. Keep in mind how much support the Coca-Cola company has given to many causes over the years, especially the Olympics.

“The idea here is simple – our classrooms should be healthy places where kids aren’t bombarded with ads for junk food,” the first lady said. “Because when parents are working hard to teach their kids healthy habits at home, their work shouldn’t be undone by unhealthy messages at school.” M. Obama

I am a little perplexed by what Mrs. Obama is trying to do. I’ve worked in schools for almost 30 years. I’ve never seen junk food advertising in any classroom. I haven’t seen a soda machine in schools for years. It seems as though she is trying to accomplish something that common sense dictated years ago. I’ve never seen advertising of any kind other than occasional vending machines in any school at any time. So basically Mrs. Obama is trying to achieve something that was initiated and successfully addressed at least 20 years ago. It also seems contradictory that advertising of “diet” drinks is going to be allowed. Personally I think the chemicals in diet drinks are even less healthy for children. Mrs. Obama, should we be promoting diet drinks to our school children?

I agree with the basic premise of Mrs. Obama’s Let’s Move campaign. When I look around any school I see many more kids that are heavier than they should be. But she is really missing the mark by going after the large companies to decrease their advertising, basically because few actually advertise in schools and none on a large scale. Mrs. Obama should look at the name of her campaign and focus on getting kids moving. Schools can modify their schedules to extend recess, spend more time outside, make lunch more relaxing (and social) and provide better school lunches. Why focus on taking away something that is hardly there?

If Let’s Move wants to support better nutrition in general, it should start a little closer to home. I’d like to know if Michelle Obama has ever seen or eaten a public school lunch. In my experience, I see small portions served on cardboard or styrofoam trays, food that has been in a warmer for hours (yes, hours), unappealing choices, mushy or dehydrated food and frankly poor quality food. Food programs at most schools have switched over from having school cooks to food services where the cooked food is shipped in and kept in warmers. Peek in any trash barrel in any school cafeteria and you will see just how much “school food” is thrown out. When this much food is inedible or unappealing and not consumed, children have to be starving by the end of the school day. This can’t be good for blood sugar levels.

So how much are kids actually moving in a typical school day? This is going to vary from school to school. It’s been my experience that students have anywhere from 30-40 minutes to shove in their lunch, wait for everyone to finish and then run outside to play for 15 or so minutes. Schools rarely provide playground equipment and frankly most kids don’t know how to organize games anymore. Recess is usually inconsistent, lasting 15-30 minutes at most. Middle schoolers rarely get recess at all. In the winter time students in cold climates are at times confined to their classroom the whole day. Physical education classes meet for an hour once or twice a week. It’s also important to note that even if schools or principals want to increase their students’ movement time, the demands of Common Core put significant limits on time allotted for recess and physical education.

How many exercise opportunities do children have outside the school day? Schools have so much to cover that even with the best intentions, schools can teach healthy habits but cannot provide adequate opportunities to exercise. Perhaps Mrs. Obama’s program should put more effort into developing opportunities to exercise outside of the school day and encouraging parents to take advantage of those opportunities. Instead of alienating large corporations such as Coca-Cola, use them to help fund new exercise, sports or dance programs. Children, especially those from less affluent communities, have fewer opportunities to join organized teams, exercise programs or lessons.

Children from all socioeconomic levels are spending an inordinate amount of time playing video games. This is hard to believe but some students actually believe they are playing sports when playing video games. Children are now being raised by video gamers and instead of shooting hoops in the backyard, parents and children now play video games together. If they’re playing video games they are usually not getting much exercise. If Michelle Obama and Let’s Move are so adamant about going after corporations that produce less than nutritious food, then perhaps they should also go after video game manufacturers, since video games keep kids from moving. An awful lot of computer games are used in schools too, some with little to no educational value.

Let’s Move has been in place for 4 years now and other than absurd wellness programs that have infiltrated schools I haven’t seen many changes in students’ physical well-being. These wellness programs have sucked all the fun out of any school celebration since no treats of any kind are allowed. Basically you can’t give a hungry kid a granola bar anymore. Even students who have yearly physicals are being weighed at school and told they are fat in very public ways. And to top it off school lunches are still awful. It’s time to be a little more realistic when it comes to developing wellness programs in schools. Mrs. Obama should put some initiatives together that are realistic, actually have a chance to be successful and might accomplish something.

Setting examples for good nutrition and exercise in schools:

- Provide nutritious and appealing meals for school lunches

- Have school lunches prepared at schools and use locally grown veggies and fruits whenever possible

- Build in more time for a relaxing meal

- Don’t have kids eat where they work even at snack time

- Allow wellness plans some flexibility, reasonable treats should be allowed on special occasions.

- Allow more time for recess, provide typical playground equipment and teach students how to organize typical playground games.

- Allow longer and flexible breaks after lunch especially for older students where they have some choice on how they manage their time

- Provide physical education classes at least 2-3 days a week. Rather than focusing on playing games, teach underlying skills, traditional and other types of exercises and how to organize games, and provide cardio workouts.

- Provide an outdoor recess whenever possible.

- Encourage students to participate in community based opportunities to exercise, play organized sports or take lessons such as dance or gymnastics.

Thorough and Coordinated Core Evaluations Pay Off

**I learned so much about coordinating evaluations during this time period and I continue to follow the protocols we set up with every team I work with. When you are thorough, coordinated and prepared, you go to the table with confidence and a plan for the student that actually has a chance of working.

Several years ago I started a new job in a school district that was rumored to have some problems in their special education department. I was able to confirm the rumors almost immediately. While going through the caseload files I noticed that there were a high number of students who had gone out for their speech and language evaluation. Comparing dates, I noticed that the outside speech and language evaluations were either part of the initial request or requested after a speech and language evaluation was completed through the school.

Now we have all had this happen. A parent or the team isn’t happy with our findings. This happens when we find problems and when we actually rule out problems. We’re never going to make everyone happy 100% of the time. However, something was different with the pattern I was seeing. There were just too many outside evaluations.

Reading the evaluations, it was easy to see why this happened. The evaluations done by the school speech language pathologist were void of any narrative or analysis. Scores were reported, ranges were given and summaries were sparse. Most of the school speech and language evaluations were 2 pages at best. The quality was poor and no supplemental testing was ever given. I wondered if the therapist was really that bad, never learned the right way to evaluate or just didn’t have time to do the job properly. I wondered how these reports were presented to the parents. Was the therapist able to go into more specific detail in the meetings? The IEPs didn’t reflect this so I doubted that parents or teachers were given any more information. Basically the evaluations I read raised more questions than they answered.

Parents talk, even in large districts. It only takes one parent or team member to say something negative about a report for that opinion to spread. Pair that with a general lack of confidence in the school’s special education program and you can see how easily a situation like this may have occurred. (I’ve seen poor evaluations from clinics and hospitals but somehow it doesn’t seem to sully their reputation as much.)

With the help of a dedicated staff and a strong team leader this particular school was able to turn around the perceptions of most of the parents. The first step in this process was to improve testing in all areas.

- The school administration supported more testing and meeting time; they were at the point where they realized it was cost effective.

- The team took the time to look over many report styles and picked the best formats and pieces from each one to help develop testing templates.

- Our program manager developed a uniform heading for all our written evaluations, which immediately gave a more professional and coordinated look to our testing.

- While testing students, we collaborated with the other team members. The school psychologist often asked me to dig a little deeper in some areas. I always went to both the regular and the special education teachers to ask them what their biggest concerns were and if there was anything specific they wanted me to try and rule out.

Because of our efforts, we not only looked more professional and coordinated, we were more professional and coordinated. Parents were no longer confused when they left the meeting because everyone had their own different opinions. We did such a good job of coordinating our efforts that we rarely missed anything and our testing almost always dovetailed.

The meetings are another key factor to completing good evaluations. When reviewing testing, marathon meetings are a must. It takes a long time to review 3 or more evaluations thoroughly and to develop a good IEP. When schools take the time to answer parent concerns, parents view the schools as caring and personal. Sometimes we actually split the meetings into two if the reports were long and involved, developing the IEP a day or two later (if we had the time legally). This school system was dedicated to improving their evaluation process and hired substitutes so the teachers could stay for most if not all of the meeting. Nothing tells a parent you care less about their kid than leaving a meeting in the middle of it.

With some coordinated team effort and administrative support we were able to turn this particular situation around, keep testing in house and keep costs down. Our testing and our reports became more thorough and looked more professional than some of the previous outside evaluations. In some cases our testing was even better because we often knew the student prior to testing, we were able to include formal and informal observation, we gathered first hand information from parents and teachers to direct testing and we were able to see the kids in a familiar setting over a longer period of time.

Because we collaborated informally ahead of time:

- Our recommendations, accommodations and service delivery were truly team decisions.

- We were able to look at all factors such as student need, teacher concerns, parent concerns, other school demands, who would be responsible for accommodations and how to fit the needed services into the student’s day while developing the IEP.

- Our IEPs were some of the best and most individual specific I had ever seen.

The outside evaluations we had on file contained recommendations and accommodations. However, they were often generic or grandiose. Suggested service delivery from outside evaluations did not take the school schedule, the child’s overall needs or other educational demands into consideration. We were able to suggest accommodations and modifications that were appropriate, realistic and effective.

I was very proud of the work we did in that school district over the three year period that this particular team worked together. I learned a lot. Watching parents’ perceptions change and confidence in the school grow was especially rewarding. We knew we had a lot to do with that. Our team leader problem solved and we implemented simple and very common sense changes that made us look good. Best of all, the students ended up with an effective IEP. With teachers involved in the process they had an easier time following through on classroom accommodations and modifications. The teachers also knew they could come to us for support.

Did we keep 100% of our testing in house? No, of course not, but our percentage of in house evaluations shifted significantly, with very few evaluations in any discipline completed outside of school. With simple and professional changes we were able to improve the way we did CORE evaluations without a new mandate or law. Our team leader took the talents and strengths of smart, caring professionals and gave us the time and tools to improve (not change) our evaluation process. Bottom line: we were effective, we looked good and felt more professional.

NSSLHA at Assumption College Raising Awareness around Noise Induced Hearing Loss

This semester I have begun my journey in an Introduction to Audiology course. We have learned about a variety of forms of hearing loss and there is one that really stands out to me. This is Noise Induced Hearing Loss. This has become much more relevant with the increased use of ear buds. As many of you know, May is Better Speech and Hearing Month. At Assumption College, there is an annual spring concert in the beginning of May. The NSSLHA chapter at Assumption College has taken on the responsibility of spreading awareness of noise induced hearing loss around campus. We provide ear plugs for students and suggest that they wear them to the upcoming concert. This has become an important event for us on campus.

It is important that people know the damage that can be done when they expose themselves to noises over 85 dB. Noise induced hearing loss is a sensorineural hearing loss. This means that there is dysfunction of the cochlea or auditory nerve. More specifically, noise induced hearing loss creates damage to the outer hair cells that line the cochlea. This can cause a hearing loss up to 120 dB, which is a pretty significant hearing loss. It is important that people take certain precautions to prevent this damage. Some of the precautions that are recommended to people are to lower the volume on personal ear buds and to take occasional breaks when using ear buds. Using these precautions can help preserve one’s hearing and reduce the risk of having noise induced hearing loss.
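To give a feel for why the 85 dB figure matters, here is a minimal sketch of how quickly safe listening time shrinks as volume goes up. It assumes the commonly cited occupational guideline of 8 hours at 85 dB with the allowed time halving for every 3 dB increase; that guideline, the function name and the example levels are assumptions added for illustration and are not taken from the course material described above.

```python
def safe_exposure_hours(level_db: float,
                        limit_db: float = 85.0,
                        base_hours: float = 8.0,
                        exchange_rate_db: float = 3.0) -> float:
    """Estimate allowable daily exposure time at a given sound level.

    Assumption: 8 hours are allowed at 85 dB and the allowance halves
    for every 3 dB above that (a commonly cited occupational guideline).
    """
    return base_hours / (2 ** ((level_db - limit_db) / exchange_rate_db))


# Hypothetical example levels, chosen only to show the trend.
for level in (85, 88, 94, 100):
    minutes = safe_exposure_hours(level) * 60
    print(f"{level} dB: about {minutes:.0f} minutes per day")
```

Under these assumptions the allowance drops from a full 8 hours at 85 dB to roughly a quarter of an hour at 100 dB, which is why turning the volume down and taking breaks both help.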

With all of the new technology that exists in our world today, people should know what the damage can be when these technologies are wrongly used. With Better Speech and Hearing Month, as well as our school concert quickly approaching, it is important for the NSSLHA chapter at Assumption College to spread awareness of Noise Induced Hearing Loss. Everyone should encourage these small changes and promote healthy hearing!

Use your earbuds wisely.

The School Speech Therapist

NSSLHA stands for the National Student Speech Language Hearing Association, the college student equivalent of ASHA, the American Speech-Language-Hearing Association.

Otterbox is still the way to go…When using electronics with kids

Back in July I wrote an article on Protecting Your Electronics. I strongly suggested an Otterbox case for iPads, when working with children. I’ve used this case for almost a year and other than it being a little big and bulky, I am still very pleased with it.

A couple of weeks ago I needed to contact Otterbox customer service because the stand up holder inside the removable top broke. I was still able to use it but it wouldn’t always stay in place. I believe I broke it, not one of my students. I filled out a form online and provided them with a picture of the broken piece. Within a week or so I had a new top for my iPad Otterbox case.

The procedure was simple, Otterbox kept in contact with me and the replacement part arrived on time. Next time I need a case for anything, I’ll be looking for an Otterbox.

Now they all come in such fun colors!

Q Global follow up

Last week I spoke with one of the tech people from Pearson involved with designing the Q Global scoring system. She contacted me in response to the letter I sent a few weeks back. I got the impression that her team is dedicated to making Q Global work for Speech Language Pathologists. We had a nice long chat and went over all of my concerns plus a few other concerns that other SLPs mentioned to me as a result of my initial post.

The biggest points I mentioned were that we really just want scores, not necessarily a whole report, and the fact that we work very piecemeal and might need some scores before we finish testing. We might not want to give the whole CELF or we often use other tests in combination with the CELF. Pearson understands that we often see children over several sessions and may need to go in and change scores/update scores/add the pragmatic information or want item analysis after our initial scoring.

Changes are in the works but because it is an online program, updates take time. Pearson wants to make sure the changes are correct and that they understand our needs before the update actually takes place. I believe she mentioned early February for the next update.

For now I am pleased with the response I received from Pearson. They have their concerns also. The biggest is, if changes are allowed, will people go in and score other children to avoid paying the fee? Sure, that probably will happen. However, in my opinion that’s the main reason why personal software is a better option.

I’m still not happy with the change to Q Global. Changes are hard but I did my own little study this week. I gave the CELF 5 to a student and it took me 13.5 minutes to score using Q Global. I also gave the CELF 4 and using my software at home it took me less than 3 minutes to score and generate a report. I needed to change something with the 5 and I couldn’t. I needed to add something to the 4 and it took only a few minutes. Right now I am seeing the Q Global as a step backwards. I’ve used the Q Global several times now and I am familiar with how it works, so newness wouldn’t be the reason for my increased time. I did send an email to my contact at Pearson pointing out the time difference. With kids back to back we rarely have time to waste almost 15 minutes scoring, so we’re going to see this as one more thing we have to do at home.

With all that said, my contact at Pearson mentioned that there is a feedback button at the top of the Q Global home page. She encouraged me to use it. My guess is unless they hear from the masses, it will be difficult to justify the changes. So I encourage everyone to use that feedback button and let Pearson know the difficulty you have with Q Global.

My other new question to them was, is customer support available 24/7? If we can’t complete scoring tasks during the school day we might need extra support after hours. In my younger days I would be up extremely late working on reports.

Feel free to email me with any of your concerns (or use the feedback button). I will pass them along.

A Fun Little App

I created this using an app called Cloudart. My students had a lot of fun with it this week. I used it to work on categories, word retrieval and articulation. I purchased it for 99 cents through the iTunes store. This app is quick enough to use in a half hour session, even if the kids did some of the typing. It’s a fun little creative outlet for those of us who aren’t too creative. You can type in words or download a website like I did here. This is what appeared after I downloaded The School Speech Therapist into the app. In therapy the kids loved it and Cloudart will be something I keep on my iPad.

This one will be staying on my iPad!

Q-global scoring and the CELF-5

While I am fairly pleased with the changes to the CELF 5, I’ve found the Q-global scoring system to be very inflexible around the way Speech Language Pathologists work. I expressed my concerns to Pearson a few weeks ago and they suggested I follow up with a letter. Below is a copy of the letter I sent to ClinicalCustomerSupport@Pearson.com.

I would like to hear from other SLPs who share my concerns or have other concerns with Q-global. If you feel the same way, please take the time to follow up with your own letter to Pearson. If you would like a copy of my letter to use as a template, please email me at theschoolspeechtherapist@gmail.com.

January 2, 2014

Dear Pearson,

A few weeks ago I contacted customer service to express my concerns with the Q-Global scoring system used to score the CELF-5. Your customer service representative mentioned that other SLPs have expressed similar concerns and that I should write a letter to follow up on our conversation. Basically, the Q-Global system is not user friendly with the way Speech Language Pathologists work. Currently I’ve been using my 10 free scorings that you offered until my school district sets up their account with you. While I’ve only tested 4 students, using Q-global I’ve burned through 6 of the free scorings.

There is always going to be a period of adjustment when learning a new system. Frankly at this point it is easier and quicker to look up scores than to use the Q-global but that will work itself out. However, it is clear to me from this scoring system that Pearson has little idea about how speech and language testing works and how SLPs work, especially within the public school system.

While much of what we do aligns with the school psychologist, our testing is very different. It’s been my experience to observe that school psychologists get most of their testing done in one maybe two sessions. Because of the variety of skills we assess, decreased attention span of some of our clients, limits of some of our clients, other factors we have to consider and most important our limited time available in the schools to test, we often have to complete our extensive testing over 3-5 shorter sessions. In the schools we have 45 days to complete our testing. It is not unusual to have the best of intentions and start testing right away but not finish testing until closer to the end of the 45 day period.

While the CELF-5 is comprehensive and provides some good composite scores, not all students receive all subtests. SLPs may want to give a few subtests to begin with, obtain certain scores (or observe manner of performance) then determine what other testing they’ll give. So here lies the main glitch with the Q-global and the CELF-5. In order to obtain scores on a few subtests to begin with, I have to generate a report to get those scores. Once I generate a report it will cost me another dollar to add or change any information. Considering that most SLPs are extremely overwhelmed and work very piecemeal, Q-global scoring is going to be a very expensive proposition for schools and private therapists. Once schools realize how expensive Q-global scoring will be, they will discontinue access for their SLPs.

This is what I have experienced so far with Q-global:

Example 1: I gave the CELF-5, scored it out using Q-global, generated a report and put the file back in my bag to work on at a later date. The next time I picked up the file to work on, I noticed I had entered some data incorrectly, and it cost a credit to fix that.

Example 2: I decided I needed to include an item analysis (item analysis is something I occasionally attach to my reports) but I had already generated the report to get the scores. I wasn’t sure if that cost me a credit but I found it very difficult to change the scoring from the raw score to the item analysis.

Example 3: As part of the CELF 5 evaluation process I asked one of my classroom teachers to fill out the pragmatic profile. I had most of the report scored and written up before that document was handed back to me. It did cost me a credit to add that information into Q-global and it took a lot of time to figure out how to do this.

I believe the Q-global system can work with the CELF-5 but you need to allow more time for SLPs to go in and change data. Given the way SLPs work, at this time Q-global is not flexible enough for us. We need more time to go in and enter data, update data and frankly be able to correct mistakes if we make them. I guess if I have to I don’t mind paying $1 for each test scored, but if I have to pay more than that per student for whatever reason, I will give up Q-global or maybe even give up the CELF-5.

I’ve been a fan of the CELF since the original came out. Using the scoring system provided with the CELF-4 made my life very easy. It was on my own computer, easy to use, saved time and was flexible with changes. Priced correctly, most SLPs using the CELF-5 would consider purchasing their own scoring system. I’m also not thrilled about giving over any of my data to Pearson. I am very careful about the student information I enter.

One other thing you should know. I can’t speak for every SLP out there but I’ve never known an SLP to print out the report generated by a scoring system and present that document at a meeting. SLPs are usually just looking for scores and some item analysis. We tend to write very comprehensive narrative reports, incorporating a variety of test measures.

Thank you for taking the time to read this. I hope you will consider making some changes to the Q-global scoring for the CELF-5 to make it more user friendly for Speech Language Pathologists. If you have any specific questions or other concerns, please don’t hesitate to contact me.

Sincerely,

Teresa Sadowski MA/SLP-ccc
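For anyone who wants to play with the numbers from my letter, here is a minimal sketch of the arithmetic. It uses only the figures mentioned above (4 students tested, 6 scorings used, $1 per scoring); the function name is hypothetical and this is not anything provided by Pearson or Q-global.

```python
def cost_per_student(credits_used: int,
                     students_tested: int,
                     fee_per_credit: float = 1.00) -> float:
    """Average scoring cost per student, given the credits actually spent."""
    if students_tested <= 0:
        raise ValueError("students_tested must be positive")
    return credits_used * fee_per_credit / students_tested


# Figures from the letter: 4 students tested, 6 free scorings used.
actual = cost_per_student(credits_used=6, students_tested=4)
# What it would cost if each student needed only one scoring.
expected = cost_per_student(credits_used=4, students_tested=4)
overrun_pct = (actual - expected) / expected * 100

print(f"Expected per student: ${expected:.2f}")
print(f"Actual per student:   ${actual:.2f}")
print(f"Overrun:              {overrun_pct:.0f}%")
```

With those figures the real cost works out to $1.50 per student instead of $1.00, which is 50% more than the advertised fee. Plug in your own counts to see what a full year of evaluations might look like.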

Teacher leaves teaching to fight common core

This is a link to an article on Diane Ravitch’s blog.

I hope the powers that be start listening.

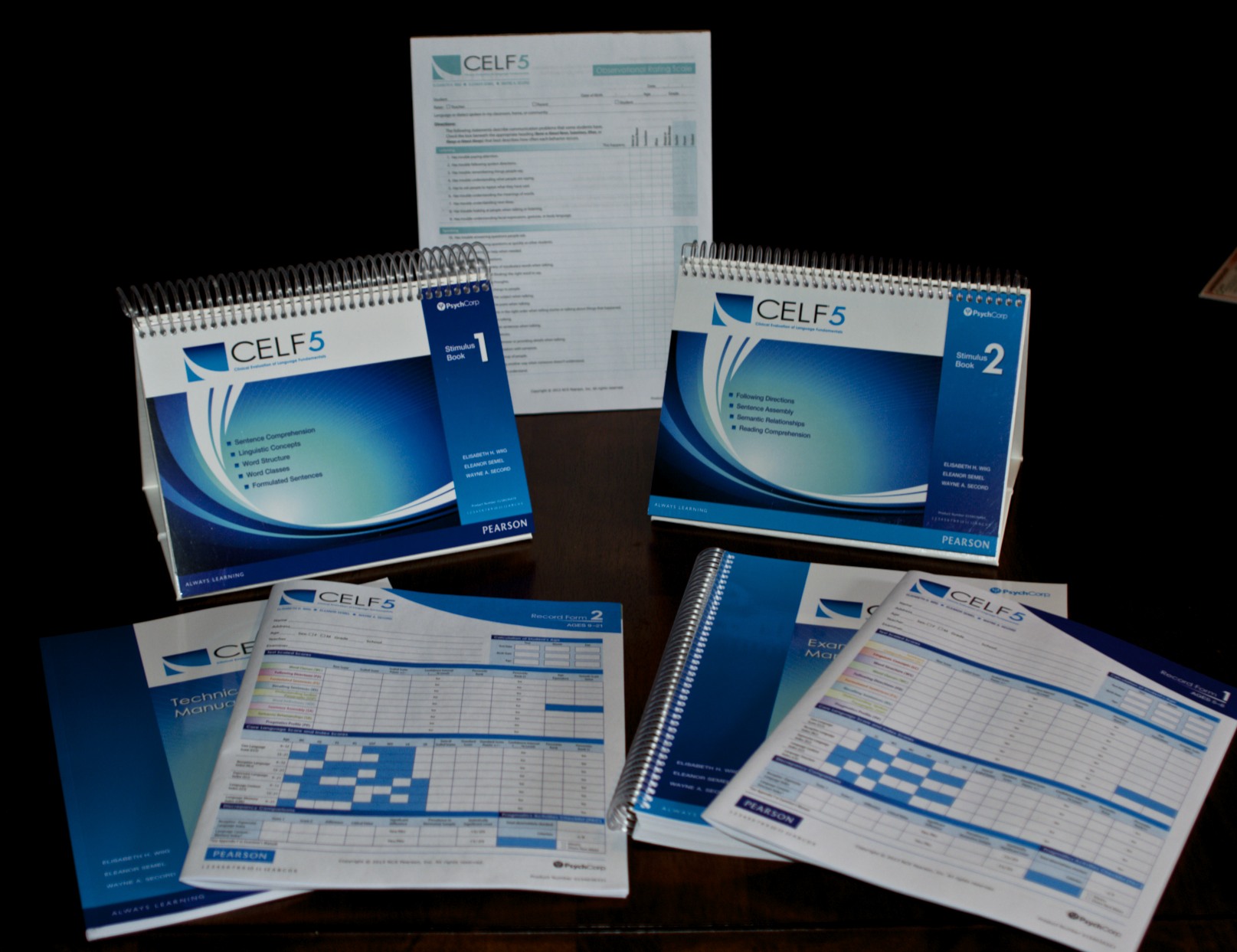

CELF 5: First Impression

The other day I was given a copy of the new CELF 5. As someone who has given the CELF since it was created, I was excited to hear a new update was in the works and even more excited when I found out in September that my administrators had purchased one for every school.

As I pulled the components out of the box and laid them along my dining room table, the main items that interested me were the protocol sheets. I assumed I could get an initial impression about the test just by perusing the protocol sheets. I also flipped through the stimulus books and noted some minor changes but a lot of familiar pictures.

My first impression is that they made some nice changes and adjusting to the changes will not be that challenging. Thank you Pearson and Authors because there was no reason to reinvent the wheel.

Looking at the 5-8 year old protocol sheet:

- Subtests are in a slightly different order.

- The old Sentence Structure subtest is now called Sentence Comprehension.

- The old Concepts and Following Directions subtest has been broken up into two separate subtests, Linguistic Concepts and Following Directions, but here is the best part: both have a discontinue rule of 4 (instead of 7).

- For all of us who have purchased or made up cheat sheets……all the instructions are in the protocol books. No reason to get whiplash as we swing our heads from the back of the stimulus book to the front as we watch our little ones make their choices, or encourage carpal tunnel from holding the cheat sheet and protocol together out of the prying eyes of little ones.

- Lots of bold font for general directions and correct answers. I wish the regular font was a little thicker or darker but that’s because of my old eyes.

- Quickly comparing the Pragmatic Profiles, lots of similarities noted; the differences seem to be in how skills are worded. Perhaps the changes in the language will make it easier for teachers and parents to fill out. I will have to look at that a little more closely.

- The addition of the Pragmatics Activities Checklist looks like a very good tool to help address manner of performance and some subjective pragmatic skills

- Expressive Vocabulary subtest is gone, which really is ok since most of us use other vocabulary testing. It was a small pain to give just to get a composite score but occasionally came in handy. I was actually hoping they would beef up the vocabulary portion to include expressive vocabulary. However, it is nice to use a variety of testing material with students. I think using a variety of tests gives you a better overall profile.

- Phonological Awareness and Word Association have been omitted. Never used those much anyway, preferring the CTOPP for PA and only used word association with lower functioning kids.

- Number Repetition and Familiar Sequences have also been omitted. I never used those subtests very much since my school psychologist always did similar subtests. Plus we always have the TAPS.

- Rapid Automatic Naming has been omitted. Not a big issue with the little ones for me but a big thumbs down for the older kids (see below).

Looking at the 9-21 year old protocol sheet:

- Subtests are in slightly different order

- Concepts and Following Directions is now called Following Directions. All directions are in the protocol sheets. However, I could have used some bold print on the Following Directions. The symbols on the protocol sheet are bigger and limited to circle/square/triangle/X. I think I like the changes to the wording.

- There is more space to write the sentences on the Formulated Sentences Subtest.

- The paragraphs in Understanding Spoken Paragraphs have changed slightly. There are 1-3 more questions per paragraph. I like that. However, I’ve been running into an interesting pattern with my students’ responses which has been affecting their performance in some cases. They worry so much about restating the question that they forget the information. (Gee wonder why that is happening?)

- Phonological Awareness and Word Association have been omitted. Never used those much anyway, preferring the CTOPP for PA and only used word association with lower functioning kids.

- Number Repetition and Familiar Sequences have also been omitted. I never used those subtests very much since my school psychologist always did similar subtests.

- Quickly comparing the Pragmatic Profiles lots of similarities noted, the differences seem to be in how skills are worded. Perhaps the changes in the language will make it easier for teachers and parents to fill out

- The addition of the Pragmatics Activities Checklist looks like a very good tool to help address manner of performance and some subjective pragmatic skills

- Rapid Automatic Naming is gone. I am disappointed with that because I often used that subtest to help confirm word retrieval issues. I will probably continue to use that subtest either for my own information or as part of the report noting that it is outdated.

One thing I did notice is that the Item Analysis for each subtest is listed in the protocol sheet. I think that will be helpful when it comes to writing the narrative for each subtest. However, I hope we are able to plug in the correct and incorrect responses in the Q global scoring system to get a list. I occasionally added item analysis to my reports for the Concepts and Following Directions subtest.

Scoring…extremely disappointed that we have to go through Q-global and that a fee is charged for every test. Personally I would rather pay for the software and have it on my computer. Sometimes I don’t do all my scoring and writing at once. It better be user friendly that way. My school system is setting up a system for scoring, which I am glad about. I’ll have to set up my own for any private practice or consulting. I hope it is easy to use and we can get similar analysis. Note that many of the discontinue rules for individual subtests have changed, for the better I think.

I also noticed that we are going to be able to compare receptive/expressive differences and determine if they are statistically significant. I think that will be a nice addition to the report. I hope the manual gives us guidance on how to interpret a difference.

I also have packets for reading and writing supplements. I’m not even going to crack those open yet. At my school the special education teachers tend to take reading and writing on, as they should. If there is a specific issue where we need more data then I will consider giving the reading and writing pieces. Once I get the language pieces of the CELF 5 under my belt then I will look at the reading and writing part of the test more closely. I can’t be all things to all people but I am glad to see it was included but separate.

With all that said my first impression of the CELF 5 is a thumbs up. It should be fairly easy to create a new evaluation template. Hopefully the manual will also give some good information. Once I start using the new CELF 5 and analyze the data a few times, I’ll write another review. It will be interesting to see if the same type of kids are qualifying. I hope and pray the standards have not been lowered. I’d really like to hear from other therapists who are using the CELF 5. What do you think in terms of ease of giving it and overall results? I’m also curious to know how your goals and objectives line up with the CELF 5 and Common Core. Specific examples are welcomed.